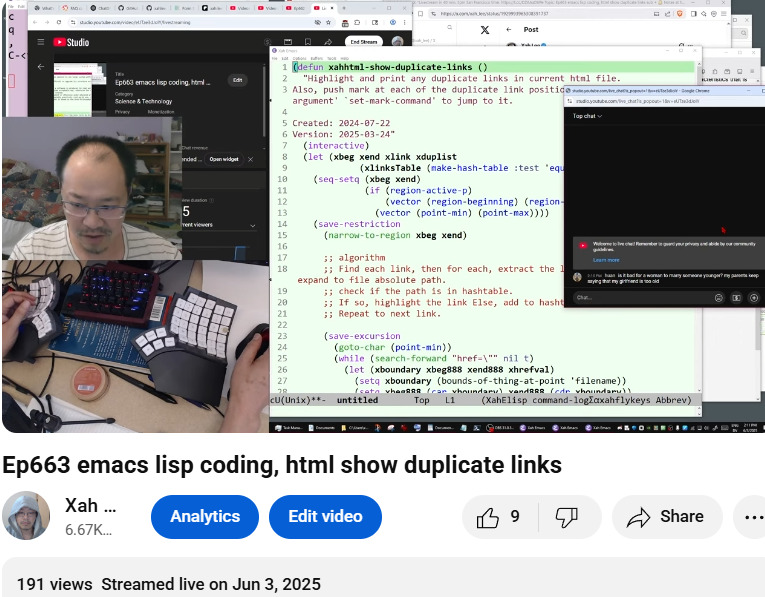

Xah Talk Show 2025-06-03 Ep663 emacs lisp coding, HTML show duplicate links

- timestamp

- 11:30 on marriage age gap

- is it bad for men to marry older women

- https://youtu.be/eUTze3dJoIY?t=688

AI Summary

The video demonstrates how to write an Emacs Lisp command to find and highlight duplicate links in HTML files.

Here's a summary of the key points:

- The creator wants to build a command to identify duplicate HTML links in their large collection of manually written HTML pages (4:30).

- They explain that simply comparing the raw href value isn't enough; each local link needs to be expanded to its full, absolute file path, because differently written relative paths (for example `a.html` and `./a.html`) can resolve to the same file (9:39).

- The core of the solution involves using a hash table (also known as a hash map or dictionary) to store link paths and their occurrences (29:28).

- The algorithm involves:

  - Iterating through all links in the HTML file.

  - For each link, extracting its full absolute file path.

  - Storing these paths in a hash table, where the key is the path and the value is a list of all positions (start and end characters) where that link appears (33:08).

  - After building the hash table, iterating through it to find keys (link paths) that have more than one entry in their value list, indicating duplicates (35:51).

  - Finally, highlighting the duplicate links in the buffer: the first occurrence of a duplicated link is highlighted in yellow, and subsequent occurrences are highlighted in green (1:06:56).

- The video also includes a lengthy digression (11:29) about age differences in marriage and cultural norms.
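
The grouping step summarized above can be sketched on plain data, without a buffer. This is a minimal illustration under stated assumptions, not the code from the video: the function name `my-duplicate-link-groups` and its input format (a list of `(HREF . POSITION)` pairs plus a base directory) are made up for the example, and it treats only `http:` and `https:` hrefs as remote.

```elisp
;; -*- lexical-binding: t; -*-
;; Hypothetical helper for illustration; not part of the video's code.
(defun my-duplicate-link-groups (links base-dir)
  "Return an alist of (KEY . POSITIONS) for links that occur more than once.
LINKS is a list of (HREF . POSITION) pairs.  Local HREFs are expanded
against BASE-DIR so that differently written paths to the same file
share one key."
  (let ((table (make-hash-table :test 'equal))
        (result nil))
    ;; Pass 1: group positions by normalized link.
    (dolist (link links)
      (let* ((href (car link))
             (key (if (string-match-p "\\`https?:" href)
                      href
                    (expand-file-name href base-dir))))
        (puthash key (cons (cdr link) (gethash key table)) table)))
    ;; Pass 2: keep only the keys that were seen at least twice.
    (maphash (lambda (key positions)
               (when (> (length positions) 1)
                 (push (cons key (sort (copy-sequence positions) #'<))
                       result)))
             table)
    result))
```

For example, `(my-duplicate-link-groups '(("a.html" . 10) ("./a.html" . 50) ("b.html" . 90)) "/tmp/site/")` groups the first two entries under one key, since both expand to the same absolute path, and leaves out `b.html`, which occurs only once.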

- The Nature of the Unix Philosophy (2006)

- The Unix-Haters Handbook

- The X-Windows Disaster (X11) (1994. Don Hopkins)

;; -*- coding: utf-8; lexical-binding: t; -*-

(require 'hi-lock) ; defines the `hi-yellow' and `hi-green' faces used below

(defun xahhtml-show-duplicate-links ()
  "Highlight and print any duplicate links in current html file.
Also, push mark at each of the duplicate link position, so you can `universal-argument' `set-mark-command' to jump to it.
Created: 2024-07-22
Version: 2025-06-03"
  (interactive)
  (let (xbeg xend xlink (xlinksTable (make-hash-table :test 'equal)))
    (seq-setq (xbeg xend) (if (region-active-p) (vector (region-beginning) (region-end)) (vector (point-min) (point-max))))
    (save-restriction
      (narrow-to-region xbeg xend)
      ;; algorithm
      ;; first, build hashtable.
      ;; Find each link, then for each, extract the link, if it is local, then expand to file absolute path.
      ;; this is the key for hashtable.
      ;; add that to hashtable.
      ;; if already exist, add to the value of that key.
      ;; the value of hashtable is a list of vectors [pos-beg pos-end]
      ;; where the position is the href value
      ;; now, find the duplicate. algo.
      ;; go thru hashtable
      ;; if a value has a list of length more than 1, it means that link is repeated.
      ;; for each of those, highlight them. by the begin end positions in the value.
      (save-excursion
        (goto-char (point-min))
        (while (search-forward "href=\"" nil t)
          (let (xfpath-beg xfpath-end xhrefval)
            (let ((xboundary (bounds-of-thing-at-point 'filename)))
              (seq-setq (xfpath-beg xfpath-end) (list (car xboundary) (cdr xboundary))))
            (setq xhrefval (buffer-substring-no-properties xfpath-beg xfpath-end))
            (setq xlink (if (string-match "\\`http" xhrefval) xhrefval (expand-file-name xhrefval default-directory)))
            (let ((xresult (gethash xlink xlinksTable)))
              (if xresult
                  (progn (puthash xlink (cons (vector xfpath-beg xfpath-end) xresult) xlinksTable))
                (progn (puthash xlink (list (vector xfpath-beg xfpath-end)) xlinksTable)))))))
      ;; now go thru hashtable
      ;; if a key's value has length 2 or more
      ;; highlight it in buffer
      (maphash
       (lambda (_ xv)
         (if (= (length xv) 1)
             nil
           ;; the keyword form of `sort' needs Emacs 30 or later
           (let ((xsorted (sort xv :key (lambda (xx) (aref xx 0)))))
             ;; color first occurrence
             (let ((xfirst (pop xsorted)))
               (overlay-put (make-overlay (aref xfirst 0) (aref xfirst 1)) 'face 'hi-yellow))
             ;; color rest occurrences
             (mapc (lambda (x) (overlay-put (make-overlay (aref x 0) (aref x 1)) 'face 'hi-green)) xsorted))))
       xlinksTable)
      ;; (message "result is [%s]" xlinksTable)
      (message "donnnn"))))
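
The command above adds overlays but has no counterpart for removing them. A possible companion is sketched below; it is an assumption of this write-up rather than something shown in the video, and the name `my-clear-duplicate-link-highlights` is made up. It relies on the built-in `remove-overlays`, which deletes overlays whose given property has the given value.

```elisp
;; -*- lexical-binding: t; -*-
;; Hypothetical companion command; not part of the video's code.
(defun my-clear-duplicate-link-highlights ()
  "Remove overlays whose face is `hi-yellow' or `hi-green' in current buffer."
  (interactive)
  (save-restriction
    (widen)
    (dolist (face '(hi-yellow hi-green))
      ;; Matches on the overlay's `face' property, so overlays created
      ;; by other tools with the same two faces are removed as well.
      (remove-overlays (point-min) (point-max) 'face face))))
```

Because the match is by face only, this sketch cannot tell the duplicate-link overlays apart from other overlays that happen to use the same faces. Tagging the overlays with a dedicated property when they are created would make the cleanup more precise.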